TL;DR

- Lead scoring quantifies prospect quality through weighted point assignments based on demographic fit, behavioral signals, and attribution data. Sales teams can then prioritize contacts by conversion likelihood instead of following up at random. Well-aligned models target 70-90% sales acceptance of MQLs.

- Organizations implementing systematic scoring models achieve 18-35% MQL-to-SQL conversion rates versus 10-15% without qualification frameworks, with top performers reaching 40%+ through predictive machine learning models that analyze historical conversion patterns.

- Attribution integration determines scoring accuracy. Leads from high-intent sources (demo requests, paid brand search) warrant +50 points or more, while low-intent touchpoints (blog visits) merit +5 to +10. This makes source data integration critical for threshold calibration and revenue prediction.

What Is Lead Scoring?

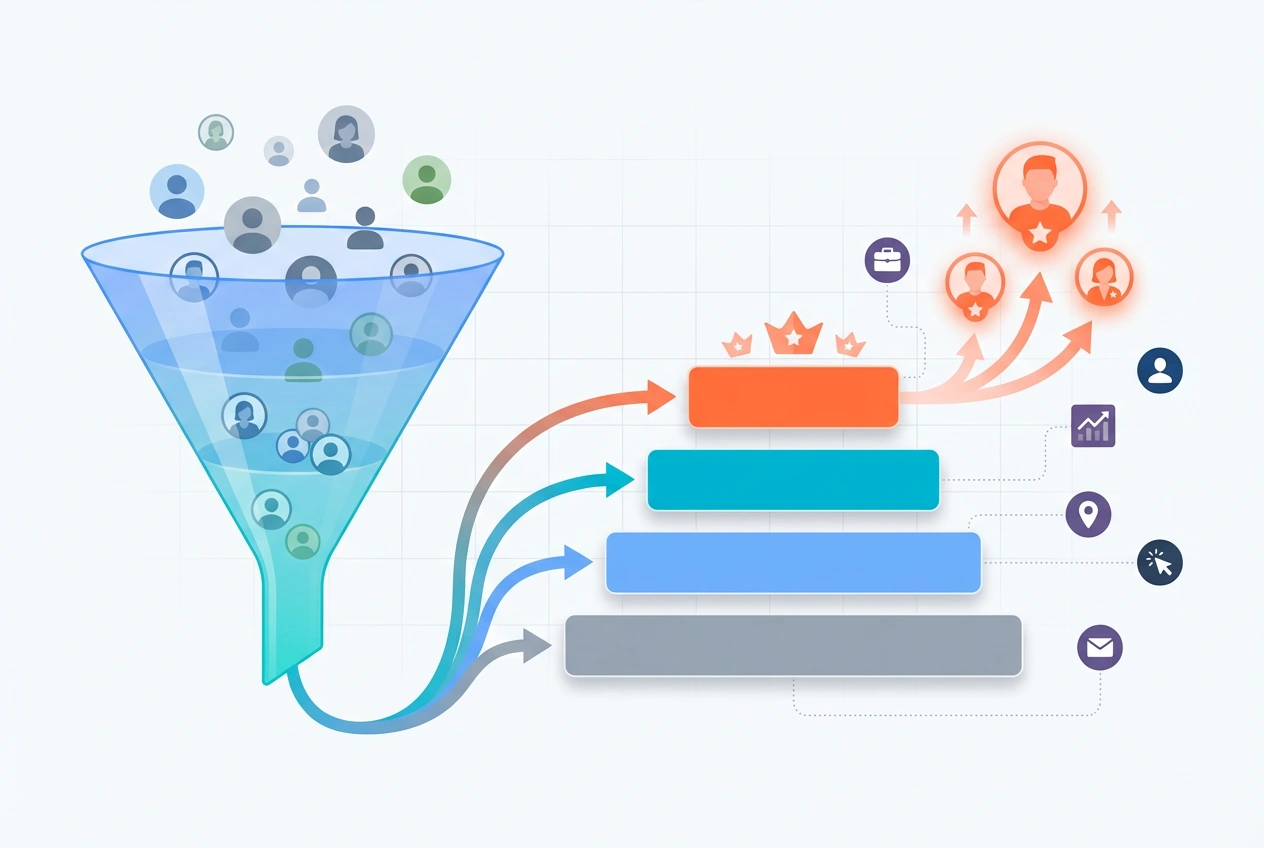

Lead scoring is a quantitative framework assigning numerical values to prospects based on explicit attributes (job title, company size, industry) and implicit behaviors (content engagement, page visits, email responses) that predict conversion likelihood.

The methodology transforms subjective qualification into objective prioritization. Sales teams operating without scores chase every lead equally, wasting cycles on unqualified contacts while hot prospects cool.

Scoring creates hierarchy. A VP at a Fortune 500 company who downloaded three case studies and visited the pricing page five times earns a score of 85. An intern at a 10-person startup who bounced from the homepage scores 15.

The threshold separates MQLs from raw leads. Set the bar at 50 points, and only qualified prospects trigger sales alerts and automated sequences.

Modern implementations integrate attribution data as scoring inputs. The channel that delivered the lead—paid search keyword, content piece, referral partner—influences the initial score because source predicts intent.

A demo request from paid brand search (high intent, +75 points) differs fundamentally from a newsletter signup via organic blog traffic (awareness stage, +10 points). Attribution-integrated scoring reflects this reality.

Scoring Model Architecture

Effective models balance three data dimensions.

Demographic and Firmographic Scoring evaluates ICP fit before engagement analysis. A B2B SaaS company targeting enterprises might assign:

- Company size 1,000+ employees: +20 points

- Director-level or above: +15 points

- Target industry (Technology, Financial Services): +15 points

- Budget authority indicator: +10 points

- Geographic match (US, UK, Canada): +10 points

These static attributes establish baseline fit. A perfect demographic match earns 70 points before any behavioral activity.

Conversely, negative scoring filters out misfit prospects. Competitors researching your product receive -50 points. Students and personal email addresses get -30 each. Geographic mismatches (regions where you don't operate) subtract 20 points.
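To make the fit layer concrete, here is a minimal Python sketch that sums the example point values above for a lead's attribute flags. The flag names (`company_1000_plus`, `student`, and so on) and the `fit_score` helper are hypothetical. A marketing automation platform would express the same rules in its own configuration.

```python
# Hypothetical attribute flags; point values mirror the example list above.
FIT_POINTS = {
    "company_1000_plus": 20,
    "director_or_above": 15,
    "target_industry": 15,
    "budget_authority": 10,
    "geo_match": 10,
}

# Negative fit penalties from the paragraph above. Treating students and
# personal emails as separate flags is an assumption.
MISFIT_POINTS = {
    "competitor": -50,
    "student": -30,
    "personal_email": -30,
    "unsupported_geography": -20,
}


def fit_score(flags: set) -> int:
    """Return baseline fit: positive firmographic points plus misfit penalties."""
    rules = {**FIT_POINTS, **MISFIT_POINTS}
    return sum(points for flag, points in rules.items() if flag in flags)


# A perfect demographic match reaches the 70-point ceiling.
perfect = {"company_1000_plus", "director_or_above", "target_industry",
           "budget_authority", "geo_match"}
print(fit_score(perfect))  # 70
print(fit_score({"director_or_above", "target_industry", "student"}))  # 0
```

Flags the rules don't recognize simply contribute nothing, so new attributes can be collected before they are scored.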

Behavioral and Engagement Scoring tracks prospect actions indicating purchase intent:

- Pricing page visit: +20 points

- Case study download: +15 points

- Product demo request: +75 points (immediate MQL)

- ROI calculator usage: +25 points

- Email link clicks: +5 points per click

- Webinar attendance: +30 points

- Integration page views: +15 points

Behavioral scoring accumulates over time. A prospect visiting your site six times over three weeks builds score progressively, reflecting sustained interest versus casual browsing.

Negative behavioral signals include email unsubscribes (-20 points), extended inactivity beyond 90 days (-30 points), and spam complaints (-100 points, permanent disqualification).
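The accumulation described above can be sketched as a running total over a lead's tracked events. This is a minimal illustration assuming hypothetical event names. It models the spam-complaint rule as a hard disqualification (returning `None`) rather than a plain -100 adjustment, which is a design choice, not something the text prescribes.

```python
from typing import List, Optional

# Hypothetical event names mapped to the example point values above.
BEHAVIOR_POINTS = {
    "pricing_page_visit": 20,
    "case_study_download": 15,
    "demo_request": 75,
    "roi_calculator_use": 25,
    "email_click": 5,
    "webinar_attended": 30,
    "integration_page_view": 15,
    "email_unsubscribe": -20,
    "inactive_90_days": -30,
}


def behavioral_score(events: List[str]) -> Optional[int]:
    """Accumulate points per tracked event.

    Returns None for a spam complaint, modeling permanent disqualification
    (an assumption about how to represent that rule).
    """
    total = 0
    for event in events:
        if event == "spam_complaint":
            return None
        total += BEHAVIOR_POINTS.get(event, 0)  # untracked events score nothing
    return total


print(behavioral_score(["pricing_page_visit", "webinar_attended", "email_click"]))  # 55
print(behavioral_score(["demo_request", "spam_complaint"]))  # None
```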

Attribution and Source Scoring incorporates channel quality into initial qualification. Not all leads are equal: source predicts conversion probability.

Attribution-based point assignments:

- Direct demo request: +75 points

- Paid brand search conversion: +50 points

- Organic search (bottom-funnel keywords): +40 points

- Partner referral: +45 points

- Content syndication lead: +15 points

- Trade show badge scan: +20 points

- Paid social ad click: +10 points

This layer prevents high-volume, low-quality channels from flooding sales with unqualified contacts. A lead’s origination channel becomes a permanent scoring component reflecting statistical conversion likelihood.
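A minimal sketch of the source layer follows: a lookup from a normalized lead-source label to the example points above. The label strings and the `source_score` helper are hypothetical. Real systems would read whatever source field the attribution platform writes to the CRM.

```python
# Hypothetical normalized source labels mapped to the example point values above.
SOURCE_POINTS = {
    "direct_demo_request": 75,
    "paid_brand_search": 50,
    "partner_referral": 45,
    "organic_search_bottom_funnel": 40,
    "trade_show_badge_scan": 20,
    "content_syndication": 15,
    "paid_social": 10,
}


def source_score(lead_source: str) -> int:
    """Points for the originating channel; missing or unmapped sources earn 0."""
    return SOURCE_POINTS.get(lead_source.strip().lower(), 0)


print(source_score("paid_brand_search"))  # 50
print(source_score("Unknown"))  # 0
```

Because unmapped values earn nothing, a lead whose source was recorded as "Unknown" or "Direct" loses this entire layer. That is why attribution gaps matter for scoring.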

Predictive vs. Rules-Based Scoring Models

Two methodologies dominate implementation approaches.

Rules-Based Scoring applies manual point assignments determined by marketing and sales collaboration. Teams analyze closed-won deals, identify common attributes, and weight factors accordingly.

The advantage: complete control and transparency. Everyone understands why a specific action earns specific points.

The limitation: static models don’t adapt to changing buyer behavior without manual updates. Rules reflect past assumptions rather than real-time conversion patterns.

Mature implementations typically define 20-40 scoring rules across demographic, behavioral, and negative categories. Annual reviews adjust point values based on conversion analysis.

Predictive Machine Learning Models analyze historical lead data to identify conversion patterns algorithmically. The system ingests thousands of data points—demographics, behaviors, engagement timing, content consumption, attribution sources—and determines which combinations predict closes.

Machine learning models recalibrate continuously as new conversion data accumulates. A previously high-scoring behavior that stops correlating with closes automatically loses weight.

The predictive advantage: accuracy improvement over time as training data expands. Organizations report 15-30% higher MQL-to-SQL conversion with predictive versus rules-based approaches.

The implementation barrier: requires minimum 1,000-2,000 historical conversions for statistically significant training data. Smaller organizations lack sufficient volume for reliable model training.
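To illustrate what "learning weights from history" means, here is a minimal sketch using logistic regression trained by batch gradient descent in plain Python. Logistic regression is a swapped-in, deliberately simple technique. Commercial predictive scoring tools may use other algorithms. The features (`visited_pricing`, `newsletter_only`) and the tiny toy dataset are hypothetical and far below the volumes a real model needs.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, epochs=3000, lr=1.0):
    """Fit logistic-regression weights with batch gradient descent.

    rows: list of 0/1 feature vectors (one per historical lead)
    labels: 1 if the lead closed, else 0
    """
    n = len(rows[0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for x, y in zip(rows, labels):
            err = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x))) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        m = len(rows)
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias


def predict(weights, bias, x) -> float:
    """Close probability for one lead's feature vector."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


# Toy history: features are [visited_pricing, newsletter_only] (hypothetical).
rows = [[1, 0]] * 10 + [[0, 1]] * 10 + [[0, 0]] * 6
labels = [1] * 8 + [0] * 2 + [1] * 1 + [0] * 9 + [1] * 1 + [0] * 5
weights, bias = train_logistic(rows, labels)
hot = predict(weights, bias, [1, 0])  # trends toward the observed 80% close rate
```

"Recalibrating continuously" amounts to rerunning training as new closed-won and closed-lost outcomes arrive. A behavior that stops correlating with closes sees its weight shrink on the next run.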

MQL Threshold Calibration

Score thresholds determine when leads qualify for sales engagement. Set too low, and sales wastes time on unready prospects. Set too high, and qualified buyers go unconverted.

The calibration process requires analyzing conversion rates across score bands.

Example analysis showing leads closed-won by score range:

- 0-25 points: 2% conversion rate

- 26-50 points: 8% conversion rate

- 51-75 points: 22% conversion rate

- 76-100 points: 45% conversion rate

- 101+ points: 67% conversion rate

This data reveals natural breakpoints. The jump from 22% conversion in the 51-75 band to 45% in the 76-100 band suggests setting the MQL threshold at 76.
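The band analysis can be sketched in two steps. First, bucket historical leads by score band and compute each band's closed-won rate. Then pick the band floor where the rate rises most. The "largest jump" rule and both helper names are hypothetical, one simple heuristic among several. It ignores volume, which the capacity considerations in this section also weigh.

```python
def band_conversion_rates(leads, band_floors):
    """Closed-won rate per band; each band runs from its floor up to the next floor.

    leads: iterable of (score, closed_won) pairs; scores below the lowest floor are skipped.
    """
    floors = sorted(band_floors)
    won = {f: 0 for f in floors}
    total = {f: 0 for f in floors}
    for score, closed in leads:
        eligible = [f for f in floors if score >= f]
        if not eligible:
            continue
        band = eligible[-1]
        total[band] += 1
        won[band] += int(closed)
    return {f: (won[f] / total[f] if total[f] else 0.0) for f in floors}


def threshold_at_largest_jump(rates):
    """Floor of the band whose conversion rate rises most over the band below it."""
    floors = sorted(rates)
    _, upper = max(zip(floors, floors[1:]), key=lambda p: rates[p[1]] - rates[p[0]])
    return upper


# Conversion rates from the example analysis, keyed by band floor.
example = {0: 0.02, 26: 0.08, 51: 0.22, 76: 0.45, 101: 0.67}
print(threshold_at_largest_jump(example))  # 76
```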

Threshold optimization balances two metrics: the lead volume sales can handle and the conversion efficiency that justifies sales effort. A threshold generating 500 MQLs monthly at 45% conversion performs better than one generating 1,000 MQLs at 15%. It yields roughly 225 conversions versus 150, from half the sales touches.

Dynamic threshold adjustment responds to pipeline coverage needs. If sales capacity increases or pipeline thins, lower the threshold temporarily to generate more volume. When pipeline overflows, raise standards to focus on highest-probability conversions.
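One way to mechanize this adjustment is to choose the highest threshold that still produces enough MQLs to fill sales capacity. A higher capacity then lowers the bar automatically, and a smaller one raises it. This is a sketch assuming the current database scores are available as a list. The `floor` and `ceiling` bounds are hypothetical guardrails, not values from the text.

```python
def threshold_for_capacity(scores, capacity, floor=50, ceiling=120):
    """Highest threshold that still yields at least `capacity` MQLs.

    Never drops below `floor`, a minimum quality bar (hypothetical default).
    """
    for threshold in range(ceiling, floor - 1, -1):
        if sum(1 for s in scores if s >= threshold) >= capacity:
            return threshold
    return floor


scores = [40, 55, 60, 70, 80, 90, 95, 110]
print(threshold_for_capacity(scores, capacity=3))  # 90
print(threshold_for_capacity(scores, capacity=5))  # 70
```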

Attribution Data Integration Requirements

Scoring accuracy depends on complete journey data flowing from attribution platforms to scoring systems.

Source Field Population enables channel-based scoring. When leads enter the CRM, their originating source, campaign, content, and touchpoint sequence must populate automatically.

Without attribution integration, all leads default to “Unknown” or “Direct” sources, eliminating the ability to weight source quality into scores. This gap typically undervalues 30-50% of qualified leads from high-performing channels.

Multi-Touch Journey Data provides scoring context beyond first touch. A lead who discovered you through paid search, engaged with three content pieces, and returned via organic search demonstrates higher intent than a single-visit lead.

Scoring systems reward multi-session engagement. Each subsequent visit within 30 days adds incremental points (first visit +10, second +15, third +20). Journey depth indicates sustained interest.
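The escalating visit schedule can be sketched as follows. It assumes the 30-day window is measured from the first visit. The text could also be read as 30 days between consecutive visits. It also assumes visits beyond the third keep earning the last tier's 20 points. Both assumptions, and the helper name, are hypothetical.

```python
from datetime import date, timedelta

# First, second, and third visit points from the text; visits beyond the third
# reuse the last value, which is an assumption.
VISIT_POINTS = [10, 15, 20]


def session_points(visit_dates, window_days=30):
    """Escalating points for visits within `window_days` of the first visit."""
    if not visit_dates:
        return 0
    visits = sorted(visit_dates)
    start = visits[0]
    in_window = [d for d in visits if d - start <= timedelta(days=window_days)]
    last = len(VISIT_POINTS) - 1
    return sum(VISIT_POINTS[min(i, last)] for i in range(len(in_window)))


d0 = date(2024, 1, 1)
print(session_points([d0, d0 + timedelta(days=5), d0 + timedelta(days=20)]))  # 45
```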

Behavioral Event Tracking from attribution platforms feeds scoring engines with high-intent signals:

- Specific page views (pricing, features, integration)

- Time-on-site thresholds (5+ minutes indicates serious evaluation)

- Return visit frequency (3+ visits in 14 days)

- Content type engagement (bottom-funnel content weighted higher)

- UTM campaign alignment with buyer journey stage

Integration architecture determines whether these events auto-populate lead records in real-time or require manual batch imports. Real-time sync enables instant score updates and automated MQL routing.
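As an illustration of turning raw attribution events into scoring signals, this minimal sketch derives three of the flags listed above from session records: 5+ minutes on site, 3+ visits in 14 days, and high-intent page views. The `Session` shape, the URL prefixes, and the signal names are hypothetical. Content-type weighting and UTM alignment are left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Set

# Hypothetical URL prefixes for pricing, feature, and integration pages.
HIGH_INTENT_PREFIXES = ("/pricing", "/features", "/integrations")


@dataclass
class Session:
    started: datetime
    seconds_on_site: int
    pages: List[str]


def high_intent_signals(sessions: List[Session], now: datetime) -> Set[str]:
    """Derive signal flags from raw session events."""
    signals = set()
    if any(s.seconds_on_site >= 5 * 60 for s in sessions):
        signals.add("serious_evaluation")  # 5+ minutes in a single session
    recent = [s for s in sessions if now - s.started <= timedelta(days=14)]
    if len(recent) >= 3:
        signals.add("frequent_return")  # 3+ visits in the last 14 days
    if any(page.startswith(HIGH_INTENT_PREFIXES) for s in sessions for page in s.pages):
        signals.add("high_intent_page")
    return signals


now = datetime(2024, 3, 15)
sessions = [
    Session(now - timedelta(days=1), 400, ["/blog/roi-guide"]),
    Session(now - timedelta(days=3), 60, ["/pricing"]),
    Session(now - timedelta(days=10), 30, ["/"]),
]
print(sorted(high_intent_signals(sessions, now)))
# ['frequent_return', 'high_intent_page', 'serious_evaluation']
```

In a real-time integration, a function like this would run on each incoming event so the score and any MQL routing update immediately.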

Implementation Framework

Building effective scoring requires six sequential steps.

1. Analyze Closed-Won Attributes

Export 200+ closed-won deals and identify common patterns. Which job titles convert most? Which company sizes? Which industries? Which initial traffic sources?

Statistical correlation analysis reveals predictive attributes. If 78% of closes are VPs at 500+ employee companies from paid search, these factors warrant high point values.
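A first pass at this analysis can be sketched as counting how often each attribute value appears among closed-won deals. Prevalence alone cannot separate predictive attributes from merely common ones. This sketch therefore adds a lift comparison against all leads as an extra step beyond the text. The field names and helpers are hypothetical.

```python
from collections import Counter


def attribute_prevalence(deals):
    """Share of deals showing each (field, value) pair, e.g. ("title", "VP")."""
    counts = Counter((field, value) for deal in deals for field, value in deal.items())
    return {key: n / len(deals) for key, n in counts.items()}


def attribute_lift(closed_won, all_leads):
    """Prevalence among wins divided by prevalence among all leads.

    Lift above 1 marks an attribute over-represented in wins.
    """
    won = attribute_prevalence(closed_won)
    base = attribute_prevalence(all_leads)
    return {key: share / base[key] for key, share in won.items() if base.get(key)}


wins = [
    {"title": "VP", "source": "paid_search"},
    {"title": "VP", "source": "organic"},
    {"title": "Manager", "source": "paid_search"},
    {"title": "VP", "source": "paid_search"},
]
all_leads = wins + [{"title": "Manager", "source": "organic"}] * 4
print(attribute_lift(wins, all_leads)[("title", "VP")])  # 2.0
```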

2. Define Scoring Categories and Ranges

Establish point allocations across three categories:

- Demographic/Firmographic: 0-70 points (baseline fit)

- Behavioral/Engagement: 0-100 points (intent signals)

- Negative Scoring: -100 to 0 points (disqualification)

Totals range from -100 to 170, with a 75-point MQL threshold representing the top 25% of lead quality.
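Combining the three categories can be sketched as clamping each subtotal to its allotted range and summing. Clamping is one hypothetical way to enforce the allocation. A platform might instead cap individual rules. The `total_score` helper is illustrative.

```python
def total_score(fit: int, behavior: int, penalties: int) -> int:
    """Clamp each category to its allotted range, then sum.

    Ranges: fit 0 to 70, behavior 0 to 100, penalties -100 to 0.
    """
    fit = max(0, min(fit, 70))
    behavior = max(0, min(behavior, 100))
    penalties = max(-100, min(penalties, 0))
    return fit + behavior + penalties


print(total_score(70, 150, 0))  # 170: behavior capped at 100
print(total_score(40, 60, -120))  # 0: penalties capped at -100
```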

3. Assign Point Values

Weight behaviors by conversion correlation. Actions taken by 60%+ of closed deals receive highest points. Rare behaviors in closing patterns get lower weights.

Calibrate negative scoring to filter aggressively. Personal email domains immediately subtract enough points to prevent MQL status regardless of other behaviors.

4. Set MQL and SQL Thresholds

Define clear qualification tiers:

- 0-49: Raw lead (nurture track)

- 50-74: Engaged lead (targeted content)

- 75-99: MQL (sales development outreach)

- 100+: Hot MQL (immediate sales engagement)

SQL designation occurs when sales confirms, through direct conversation, that an MQL meets BANT criteria (budget, authority, need, timeline).
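The tiers above map directly onto a small classification function. The sketch below is illustrative, and the tier labels simply reuse the text's names. SQL status stays outside it because that designation comes from a sales conversation, not from the score.

```python
def lead_tier(score: int) -> str:
    """Map a total score to the qualification tiers defined above."""
    if score >= 100:
        return "Hot MQL"
    if score >= 75:
        return "MQL"
    if score >= 50:
        return "Engaged lead"
    return "Raw lead"


print(lead_tier(82))  # MQL
```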

5. Integrate with CRM and Automation

Configure lead scoring rules in your marketing automation platform. Map scoring fields to CRM custom fields for sales visibility.

Build automation workflows:

- Score reaches 75: Alert assigned sales rep, add to outreach sequence

- Score exceeds 100: Create task for same-day contact attempt

- Score drops below 30: Remove from active pipeline, move to long-term nurture
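These workflows fire on threshold crossings, so a sketch compares a lead's previous and new score and returns the actions to trigger. Triggering only on crossings is an assumption that keeps a rep from being re-alerted on every small score change. The action names are hypothetical placeholders for whatever the automation platform calls them.

```python
from typing import List


def workflow_actions(old_score: int, new_score: int) -> List[str]:
    """Actions triggered when a score change crosses a workflow threshold."""
    actions = []
    if old_score < 75 <= new_score:
        actions.append("alert_rep_and_enroll_sequence")  # reaches 75
    if old_score <= 100 < new_score:
        actions.append("create_same_day_task")  # exceeds 100
    if old_score >= 30 > new_score:
        actions.append("move_to_long_term_nurture")  # drops below 30
    return actions


print(workflow_actions(60, 110))  # ['alert_rep_and_enroll_sequence', 'create_same_day_task']
```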

6. Monitor and Optimize Quarterly

Track MQL-to-SQL conversion rates by score band. If 75-85 point leads convert at 18% while 86-95 point leads convert at 44%, adjust the threshold upward.

Analyze which scoring factors correlate most strongly with closes. Increase point values for predictive behaviors, decrease weights for non-correlating actions.

Common Implementation Challenges

Insufficient Historical Data prevents statistical modeling. Organizations with fewer than 500 annual closes lack sufficient conversion data for reliable predictive scoring.

Solution: Start with rules-based scoring using best-practice frameworks from similar organizations. Transition to predictive models after accumulating 12-24 months of score-to-conversion data.

Sales-Marketing Misalignment occurs when marketing-defined MQLs don't match sales' qualification standards. When sales rejects 60% or more of MQLs as unqualified, friction follows.

Solution: Collaborate on scoring criteria using closed-won analysis both teams agree reflects reality. Sales input ensures threshold accuracy; marketing input prevents unrealistic standards that generate zero MQLs.

Attribution Data Gaps leave 40-60% of leads without source attribution, making channel-based scoring impossible.

Solution: Integrate an attribution tracking platform before deploying scoring. Without complete source data, you're scoring with one hand tied behind your back. You have demographic and behavioral signals only, with no source-quality signal.

Score Inflation Over Time happens when point values don’t adjust to behavioral changes. Actions that once predicted conversions lose correlation as buyer behavior evolves.

Solution: Schedule quarterly scoring audits analyzing point value effectiveness. Decrease weights for non-correlating behaviors, increase points for emerging high-intent signals.

Negative Scoring Gaps allow poor-fit prospects to accumulate high scores through behavioral engagement alone.

Solution: Implement aggressive negative scoring for disqualifying attributes. Competitors should never reach MQL status regardless of engagement. Personal email addresses should immediately subtract enough points to offset behavioral gains.

Scoring Performance Metrics

Five KPIs measure scoring model effectiveness.

MQL-to-SQL Conversion Rate

Calculation: SQLs ÷ MQLs × 100

Benchmark: 18-22% average, 25-35% top performers, 40%+ for B2B SaaS with mature predictive models.

This metric directly reflects scoring accuracy. High conversion rates indicate the threshold correctly identifies sales-ready prospects. Low rates (<15%) suggest the bar is set too low, flooding sales with unqualified contacts.

Lead Scoring Efficiency

Calculation: (MQLs Accepted by Sales ÷ Total MQLs) × 100

Target: 70-90% acceptance rate.

Sales rejection of marketing-generated MQLs signals scoring misalignment. If sales only accepts 50% of MQLs, the model weights behaviors that don’t actually predict qualification.
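Both formulas above are simple ratios. The sketch below computes them with a guard against zero MQLs and checks acceptance against the 70-90% target. The helper names and sample counts are hypothetical.

```python
def mql_to_sql_rate(sqls: int, mqls: int) -> float:
    """SQLs / MQLs x 100; returns 0.0 when there are no MQLs."""
    return 100.0 * sqls / mqls if mqls else 0.0


def sales_acceptance_rate(accepted: int, mqls: int) -> float:
    """(MQLs accepted by sales / total MQLs) x 100."""
    return 100.0 * accepted / mqls if mqls else 0.0


def acceptance_on_target(rate: float, low: float = 70.0, high: float = 90.0) -> bool:
    """True when acceptance falls inside the 70-90% target band."""
    return low <= rate <= high


print(mql_to_sql_rate(44, 200))  # 22.0
print(acceptance_on_target(sales_acceptance_rate(100, 200)))  # False (50% acceptance)
```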

Time to MQL

Average days from first touch to MQL threshold crossing.

Insight: Short time-to-MQL (0-7 days) indicates high-intent lead sources. Extended periods (60+ days) suggest awareness-stage traffic requiring nurture.

This metric informs scoring strategy—should you weight early behaviors more heavily, or require sustained engagement over time?
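Time to MQL can be sketched as the mean gap between first touch and threshold crossing, skipping leads that never crossed. The result is then read against the 0-7 day and 60+ day benchmarks above. The middle bucket's label is a hypothetical placeholder, since the text does not name it.

```python
from datetime import date
from statistics import mean
from typing import Optional


def average_days_to_mql(leads) -> Optional[float]:
    """Mean days from first touch to MQL; leads with no MQL date are skipped.

    leads: iterable of (first_touch_date, mql_date_or_None) pairs.
    """
    durations = [(mql - first).days for first, mql in leads if mql is not None]
    return mean(durations) if durations else None


def time_to_mql_bucket(days: float) -> str:
    """Interpret an average against the 0-7 day and 60+ day benchmarks."""
    if days <= 7:
        return "high-intent source"
    if days >= 60:
        return "awareness-stage traffic needing nurture"
    return "between the two benchmarks"


leads = [
    (date(2024, 1, 1), date(2024, 1, 4)),
    (date(2024, 1, 1), date(2024, 1, 10)),
    (date(2024, 2, 1), None),  # never crossed the threshold
]
avg = average_days_to_mql(leads)  # 6 days on average
print(time_to_mql_bucket(avg))  # high-intent source
```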

Score Distribution Analysis

Percentage of database in each score band:

- 0-25: 45% (cold contacts)

- 26-50: 30% (engaged leads)

- 51-74: 15% (warm prospects)

- 75-99: 8% (MQLs)

- 100+: 2% (hot MQLs)

Healthy distribution shows most leads in low bands with progressively fewer in higher tiers. If 30% of your database scores as MQL, either your scoring is too generous or your targeting is remarkably precise.
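The distribution check can be sketched by bucketing every score into the bands above and reporting percentages. Summing the two MQL bands then gives the share to compare against the "30% of your database" warning sign. Band labels follow the example. Counting negative scores as cold is an assumption.

```python
# Band floors and labels mirror the example distribution above.
BANDS = [(0, "cold"), (26, "engaged"), (51, "warm"), (75, "MQL"), (100, "hot MQL")]


def band_label(score: int) -> str:
    """Label of the highest band whose floor the score reaches (negatives count as cold)."""
    label = BANDS[0][1]
    for floor, name in BANDS:
        if score >= floor:
            label = name
    return label


def score_distribution(scores) -> dict:
    """Percentage of the database in each score band."""
    counts = {name: 0 for _, name in BANDS}
    for s in scores:
        counts[band_label(s)] += 1
    total = len(scores)
    return {name: (100.0 * n / total if total else 0.0) for name, n in counts.items()}


scores = [10] * 45 + [30] * 30 + [60] * 15 + [80] * 8 + [120] * 2
dist = score_distribution(scores)
print(dist["MQL"] + dist["hot MQL"])  # 10.0
```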

Revenue per Score Band

Average deal size by score range when lead entered pipeline.

Analysis reveals whether high scores predict not just conversion but also deal value. If 100+ point leads close 2.5x larger deals than 75-85 point leads, prioritize top-tier contacts even more aggressively.

Advanced Optimization Tactics

Decay Mechanisms reduce scores for inactive leads. A prospect scoring 85 points but showing no engagement for 90 days shouldn’t remain an MQL.

Implement time-based decay: subtract 5 points monthly after 60 days of inactivity. A once-hot lead naturally drops from 85 to 60 over five months without new engagement.

Decay prevents sales from perpetually chasing prospects who’ve moved on. Fresh low-score leads with recent activity often convert better than stale high-score contacts.
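The decay rule can be sketched as subtracting 5 points for each full month of inactivity past the 60-day grace period. This reproduces the 85-to-60 drop after five months of decay. Treating a month as 30 days and flooring the score at zero are assumptions.

```python
def decayed_score(score: int, days_inactive: int, grace_days: int = 60,
                  points_per_month: int = 5, days_per_month: int = 30) -> int:
    """Subtract points for each full month of inactivity past the grace period.

    Treats a month as 30 days and floors the result at zero (both assumptions).
    """
    if days_inactive <= grace_days:
        return score
    months = (days_inactive - grace_days) // days_per_month
    return max(score - points_per_month * months, 0)


print(decayed_score(85, 60 + 5 * 30))  # 60
```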

Account-Level Scoring aggregates individual scores across multiple contacts at target accounts. ABM strategies require knowing overall account engagement, not just individual contact interest.

Account scoring sums individual scores weighted by seniority (for example, executives 3x, directors 2x, managers and individual contributors 1x) and factors in account-level firmographic fit.

An account with three engaged contacts—a VP (score 75 × 3 = 225), director (score 65 × 2 = 130), and manager (score 55 × 1 = 55)—achieves 410 aggregate points signaling enterprise deal potential.
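The aggregation in the example can be sketched as a seniority-weighted sum plus optional account-level fit points. The seniority keys are hypothetical, and a VP is treated as an executive. Unknown levels default to a 1x weight, which is an assumption.

```python
# Hypothetical seniority levels; weights follow the worked example above.
SENIORITY_WEIGHT = {"executive": 3, "director": 2, "manager": 1, "individual_contributor": 1}


def account_score(contacts, firmographic_points: int = 0) -> int:
    """Seniority-weighted sum of contact scores plus account-level fit points.

    contacts: iterable of (seniority_level, lead_score) pairs.
    """
    weighted = sum(score * SENIORITY_WEIGHT.get(level, 1) for level, score in contacts)
    return weighted + firmographic_points


print(account_score([("executive", 75), ("director", 65), ("manager", 55)]))  # 410
```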

Lifecycle Stage Integration adjusts scoring criteria based on funnel position. Awareness-stage leads require different behaviors for MQL status than consideration-stage prospects.

Early-stage leads might need 5+ content engagements over 60 days to score 75 points. Late-stage leads with a single demo request instantly hit 100+ points.

This prevents penalizing new prospects for not immediately showing purchase intent while correctly prioritizing bottom-funnel signals.

Source-Specific Thresholds recognize that different acquisition channels produce different baseline quality.

Paid brand search leads might need only 60 points for MQL status (high intent evident in search query). Content syndication leads require 85 points (broader awareness play).

This approach maintains lead quality standards while acknowledging source-dependent conversion probabilities.
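Source-specific thresholds amount to a per-source lookup with a default. This sketch assumes the 75-point threshold used elsewhere in this article applies to every source not listed. The source labels are hypothetical.

```python
# Hypothetical per-source thresholds; everything else uses the 75-point default.
SOURCE_THRESHOLDS = {"paid_brand_search": 60, "content_syndication": 85}
DEFAULT_THRESHOLD = 75


def is_mql(score: int, lead_source: str) -> bool:
    """True when the score meets the threshold for the lead's source."""
    return score >= SOURCE_THRESHOLDS.get(lead_source, DEFAULT_THRESHOLD)


print(is_mql(65, "paid_brand_search"), is_mql(80, "content_syndication"))  # True False
```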

Best Practices for Sustainable Scoring

Start Simple, Iterate Complex

Initial implementations should track 10-15 scoring factors, not 50. Complexity creates maintenance burden and reduces transparency.

Launch with demographic fit and three high-intent behaviors (demo request, pricing page, case study). Add sophistication based on 90 days of conversion data analysis.

Document Scoring Logic Transparently

Sales teams distrust black-box algorithms. Publish scoring criteria so reps understand why a lead scores 85 versus 45.

Transparency enables sales feedback. Reps reporting that high-scoring leads consistently fail qualification signals model recalibration needs.

Separate Scoring from Routing

Don’t automatically assign every MQL to sales. Implement a review layer where sales development qualifies MQLs before routing to account executives.

This buffer prevents bad scores from disrupting AE productivity while SDRs validate and provide feedback improving scoring accuracy.

Align Scoring with Sales Capacity

If sales can handle 100 new MQLs monthly, calibrate thresholds to generate 100-120 MQLs, not 300. Excess volume creates follow-up delays that harm conversion rates.

Better to set stringent standards ensuring rapid contact than generous scoring that overwhelms capacity.

Build Closed-Loop Feedback

Track which scored leads become SQLs, opportunities, and closed-won deals. Feed this outcome data back into the scoring model.

Quarterly reviews compare score bands to conversion rates, adjusting point values to improve predictive accuracy.

Frequently Asked Questions

How many data points should a lead scoring model include?

Start with 10-15 factors across demographic fit and behavioral intent. Mature models expand to 30-40 factors including negative scoring, attribution sources, and engagement timing.

Complexity requires maintenance. Each scoring rule needs periodic validation against conversion data. More factors improve accuracy if properly weighted, but diminishing returns occur beyond 40-50 criteria.

The key: focus on statistically significant predictors. If a behavior appears in 80% of closed deals but rarely among lost ones, score it heavily. Rare behaviors that don't correlate with conversions add noise without value.

What MQL threshold score should I set?

Analyze your lead database conversion rates by score band. Set the threshold where conversion probability justifies sales engagement effort.

If leads scoring 75+ convert at 30% while leads scoring 50-74 convert at 12%, a 75-point threshold makes sense. You’re prioritizing the segment 2.5x more likely to close.

Balance two constraints: sales capacity (how many MQLs can they handle?) and pipeline goals (how much opportunity value do you need?). The threshold should generate MQL volume matching sales bandwidth.

How does lead scoring integrate with attribution tracking?

Attribution data determines source-based point assignments. The channel, campaign, and content that generated the lead influences the initial score.

Integration requires attribution platforms syncing source fields to CRM lead records. Scoring rules then reference these fields: IF Lead Source = Paid Brand Search, ADD 50 points.

Without attribution integration, all leads default to generic sources (Direct, Unknown), eliminating the ability to weight high-intent channels appropriately. This gap typically costs 15-20% scoring accuracy.

What’s the difference between lead scoring and account scoring?

Lead scoring evaluates individual contacts based on their attributes and behaviors. Account scoring assesses entire organizations by aggregating multiple contact scores, firmographic fit, and account-level engagement.

Use lead scoring for transactional sales where individual buyers make purchase decisions. Use account scoring for enterprise sales requiring multiple stakeholder engagement and committee-based buying.

Many B2B organizations implement both—account scores identify target companies, individual lead scores within those accounts determine which contacts to prioritize for outreach.

How often should I recalibrate my scoring model?

Review scoring effectiveness quarterly by analyzing MQL-to-SQL conversion rates, sales acceptance rates, and closed-won correlations.

Adjust point values when specific factors stop predicting conversions or new high-correlation behaviors emerge. Market changes, product evolution, and competitive dynamics shift buyer behavior—scoring must adapt.

Predictive machine learning models recalibrate automatically as new conversion data feeds training datasets. Rules-based models require manual quarterly audits and annual comprehensive overhauls.

Should I use positive scoring only, or include negative scoring?

Implement aggressive negative scoring to filter poor-fit prospects. Positive-only models allow mismatched leads to accumulate points through behavioral engagement alone.

Negative scoring prevents competitors (−50 points), students (−30), personal emails (−30), and off-ICP industries (−20) from ever reaching MQL status regardless of website activity.

This maintains pipeline quality and prevents sales from wasting cycles on contacts who will never convert. Better to under-route borderline prospects than flood sales with disqualified leads.

How do predictive scoring models differ from rules-based systems?

Rules-based scoring applies manual point assignments determined by marketing-sales collaboration. Every factor earns explicit point values (job title = +15, pricing page = +20) that remain static until manually updated.

Predictive scoring uses machine learning to analyze thousands of historical conversions, identifying patterns algorithmically. The model determines optimal weights for each factor and recalibrates continuously as new conversion data accumulates.

Predictive models require 1,000-2,000 historical conversions for reliable training. Organizations with sufficient data see 15-30% higher MQL-to-SQL conversion rates. Smaller companies lacking conversion volume should use rules-based approaches.

What role does lead scoring play in marketing attribution?

Lead scoring validates attribution model effectiveness by showing which sources and campaigns generate the highest-quality leads.

Compare average lead scores by channel. If organic search delivers leads averaging 68 points while content syndication averages 32, organic search likely deserves a larger budget allocation.

Score-based attribution analysis estimates relative revenue potential by channel: Average Lead Score × Lead Volume × Historical Conversion Rate. The result is a unitless index for ranking channels rather than a revenue figure. It prioritizes channels producing both volume and quality rather than optimizing for volume alone.
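The channel formula can be sketched as a product per channel followed by a ranking. The average scores come from the example above. The volumes and conversion rates are made-up inputs for illustration, and the function name is hypothetical. Because lead score has no monetary unit, the output is only meaningful for comparing channels against each other.

```python
def channel_potential(channels):
    """Rank channels by average lead score x lead volume x historical conversion rate.

    channels: {name: (avg_score, lead_volume, conversion_rate)}.
    """
    index = {name: avg * volume * rate for name, (avg, volume, rate) in channels.items()}
    return sorted(index.items(), key=lambda item: item[1], reverse=True)


# Average scores from the example above; volumes and conversion rates are made up.
ranking = channel_potential({
    "organic_search": (68, 200, 0.10),
    "content_syndication": (32, 500, 0.03),
})
print([name for name, _ in ranking])  # ['organic_search', 'content_syndication']
```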