TL;DR:

- Machine learning algorithms analyze historical conversion data to rank prospects by likelihood to close, eliminating subjective scoring biases that plague traditional point systems.

- Companies implementing AI-powered scoring achieve 75% higher conversion rates than manual methods, with 98% of sales teams reporting improved lead prioritization accuracy.

- Integration with attribution platforms creates closed-loop intelligence where predictive models continuously refine based on actual lead source performance and customer journey data.

What Is Predictive Lead Scoring?

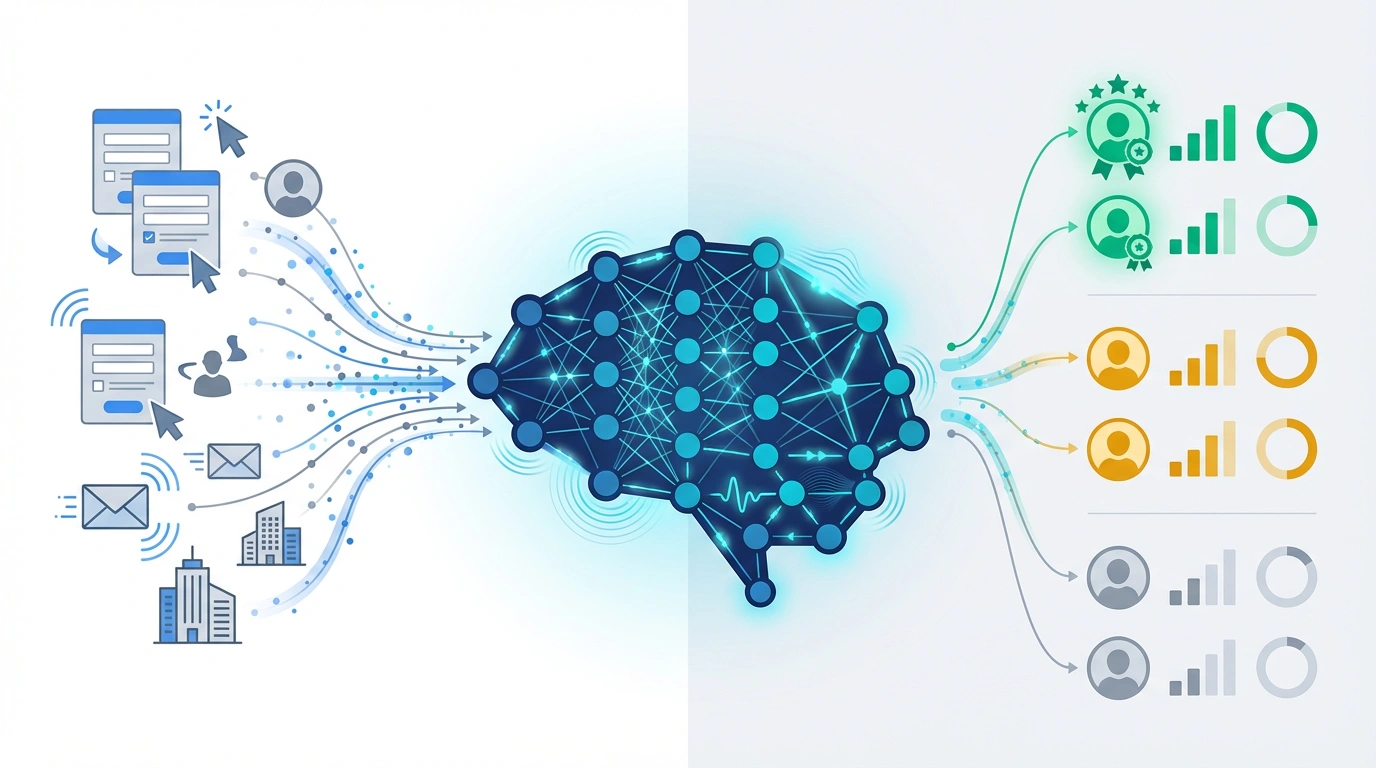

Predictive Lead Scoring applies machine learning algorithms to historical customer data, assigning each prospect a conversion probability score based on behavioral patterns, firmographic attributes, and engagement signals.

Unlike traditional scoring that assigns arbitrary points for actions like email opens or whitepaper downloads, predictive models identify which combinations of factors actually correlate with closed revenue.

The algorithms process millions of data points across dimensions including company size, technology stack, website behavior, email engagement, content consumption patterns, and previous customer profiles.

Models continuously retrain on new conversion outcomes, automatically adjusting scoring criteria as buyer behavior evolves and market conditions shift.

This creates a self-improving system where scoring accuracy compounds over time rather than degrading like static rule-based approaches.

Core Algorithmic Approaches

Random Forest models dominate predictive scoring implementations because they handle non-linear relationships and feature interactions while being less prone to overfitting than a single decision tree.

These ensemble methods combine multiple decision trees, each trained on different data subsets, to produce consensus predictions that outperform single-model approaches.

Logistic Regression serves as the foundation for interpretable scoring where stakeholders need to understand why specific leads receive high or low scores.

The model learns a coefficient (a log-odds weight) for each feature, making it straightforward to explain that a lead scored 85/100 because it matches your ideal customer profile on seven key dimensions.
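
As a minimal sketch of why logistic regression is easy to explain, the snippet below scores one lead and keeps each feature's contribution to the log-odds. The intercept, feature names, and weights are hypothetical placeholders, not values from a trained model.

```python
import math

# Hypothetical intercept and coefficients (log-odds weights).
# A real model would learn these from historical conversion data.
INTERCEPT = -3.0
COEFFICIENTS = {
    "matches_icp_industry": 1.2,
    "requested_demo": 1.5,
    "employees_over_200": 0.8,
    "opened_last_3_emails": 0.4,
}


def score_lead(features: dict) -> tuple:
    """Return (conversion probability, per-feature log-odds contributions)."""
    contributions = {
        name: weight * features.get(name, 0.0)
        for name, weight in COEFFICIENTS.items()
    }
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))  # logistic (sigmoid) function
    return probability, contributions


lead = {"matches_icp_industry": 1, "requested_demo": 1, "employees_over_200": 1}
probability, contributions = score_lead(lead)
score = round(probability * 100)  # probability rescaled to a 0-100 score
```

Sorting `contributions` by value gives a per-lead explanation: the features with the largest positive entries are the ones pushing the score up.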

Neural Networks capture complex, multi-layered patterns in enterprise datasets exceeding 100,000 historical conversions.

Deep learning architectures excel when traditional algorithms plateau, especially for organizations with diverse product lines and segmented buyer personas.

Performance Benchmarks vs Traditional Methods

Companies implementing predictive scoring achieve 138% ROI on lead generation compared to 78% for those relying on manual point assignment systems.

The performance gap stems from three factors: elimination of recency bias, dynamic weighting adjustments, and discovery of non-obvious correlation patterns.

Traditional scoring assigns fixed points (e.g., +10 for demo request, +5 for pricing page visit) based on intuition rather than statistical validation.
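
For contrast, a fixed-point system of this kind reduces to a lookup table. This is a sketch only; the event names are hypothetical and the point values are the ones from the example above.

```python
# Fixed point values chosen by intuition; event names are hypothetical.
POINTS = {"demo_request": 10, "pricing_page_visit": 5}


def rule_based_score(events):
    """Sum fixed points for each recorded action; unknown actions score 0."""
    return sum(POINTS.get(event, 0) for event in events)


total = rule_based_score(["pricing_page_visit", "demo_request", "blog_view"])
```

Nothing in this table changes unless someone edits it by hand, which is the limitation predictive models are meant to address.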

Predictive models reveal counterintuitive insights, such as prospects who view pricing pages early converting 23% less often than those who consume educational content first.

Conversion rate improvements average 75% when comparing machine learning to rules-based scoring, with top-performing implementations reaching 6% close rates versus the 2-3% industry baseline.

Gartner research documents a more conservative 25% average lift in conversion rates across organizations deploying AI-powered qualification, with payback periods under four months.

Sales velocity increases 40% as reps focus exclusively on statistically validated opportunities rather than chasing leads that superficially appear qualified.

Data Infrastructure Requirements

Effective predictive models require a minimum of 1,000 historical conversions to establish statistically significant patterns, though 5,000+ conversions produce enterprise-grade accuracy.

Organizations below this threshold should implement traditional scoring while accumulating training data, then transition to predictive methods once sample size supports reliable modeling.

Feature engineering transforms raw CRM data into model-ready inputs across five categories: firmographics, technographics, engagement metrics, journey signals, and temporal patterns.

Firmographic variables include revenue band, employee count, industry classification, geographic market, and growth trajectory indicators.

Technographic data captures technology stack composition, revealing buying propensity based on existing software investments and integration requirements.

Engagement scoring aggregates email opens, link clicks, content downloads, webinar attendance, and sales call participation into composite interaction metrics.

Journey signals track progression through awareness, consideration, and decision stages based on page visit sequences, content topic consumption, and channel touchpoint patterns.

Temporal features measure velocity metrics like days from first touch to MQL, session frequency patterns, and engagement intensity acceleration or deceleration.
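
The temporal features can be sketched from raw timestamps as follows. The function name, field names, and the half-window definition of acceleration are assumptions made for illustration; the sketch also assumes every session falls between first touch and MQL.

```python
from datetime import date


def temporal_features(first_touch: date, mql_date: date, session_dates: list) -> dict:
    """Derive simple velocity features from a lead's timeline."""
    days_to_mql = (mql_date - first_touch).days
    weeks = max(days_to_mql / 7, 1.0)  # avoid dividing by a near-zero window
    sessions_per_week = len(session_dates) / weeks

    # Acceleration proxy: sessions in the second half of the window
    # minus sessions in the first half (positive means engagement is rising).
    midpoint = first_touch + (mql_date - first_touch) / 2
    early = sum(1 for d in session_dates if d <= midpoint)
    late = len(session_dates) - early

    return {
        "days_to_mql": days_to_mql,
        "sessions_per_week": sessions_per_week,
        "engagement_acceleration": late - early,
    }


features = temporal_features(
    first_touch=date(2024, 1, 1),
    mql_date=date(2024, 1, 29),
    session_dates=[
        date(2024, 1, 2),
        date(2024, 1, 10),
        date(2024, 1, 20),
        date(2024, 1, 24),
        date(2024, 1, 27),
    ],
)
```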

Attribution Integration Architecture

Predictive scoring accuracy depends directly on lead source data quality since algorithms use channel attribution as a primary training feature.

Models discover that leads from certain sources (e.g., industry-specific webinars) convert at 8x the rate of others (e.g., generic content syndication), automatically weighting scores accordingly.

Integration with multi-touch attribution platforms creates feedback loops where scoring models inform budget allocation and attribution data refines scoring criteria.

This symbiotic relationship means organizations with sophisticated attribution tracking achieve 34% higher predictive accuracy than those relying on basic last-touch data.

API architecture enables real-time score updates as prospects engage, triggering automated workflows when leads cross defined threshold values.
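
One way to sketch the threshold trigger: fire a workflow only when a score update crosses the threshold upward, so a lead that is already above it is not re-routed on every update. The threshold value, action name, and lead ID below are hypothetical.

```python
SDR_THRESHOLD = 80  # hypothetical cutoff for routing to a sales rep


def on_score_update(lead_id, previous_score, new_score, threshold=SDR_THRESHOLD):
    """Return a workflow action only when the score crosses the threshold upward."""
    crossed_up = previous_score < threshold <= new_score
    if crossed_up:
        return {"lead_id": lead_id, "action": "route_to_sdr", "score": new_score}
    return None  # no crossing, so no workflow fires


first = on_score_update("lead-123", previous_score=72, new_score=84)
second = on_score_update("lead-123", previous_score=84, new_score=88)
```

Here `first` carries a routing action while `second` is `None`, because the lead was already above the threshold.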

Bi-directional CRM syncs ensure models train on complete revenue outcomes including deal size, sales cycle length, and customer lifetime value beyond binary won/lost status.

Leading implementations incorporate offline conversion data through CRM integrations, connecting online journey tracking with in-person event attendance and direct sales interactions.

ROI Quantification Framework

Calculate predictive scoring impact using this formula: ROI = [(Baseline Revenue × Conversion Lift) – Implementation Cost] / Implementation Cost × 100.

For an organization whose 10,000 annual MQLs generate $500K in total revenue, a conservative 20% conversion lift yields $100K in incremental revenue against typical annual platform costs of $15K, producing roughly 567% first-year ROI: ($100K – $15K) / $15K.
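
The worked figures can be checked with a few lines of Python. This sketch reads incremental revenue as baseline revenue multiplied by conversion lift, which is what the $500K, 20%, and $100K figures imply; the function and parameter names are illustrative.

```python
def predictive_scoring_roi(baseline_revenue, conversion_lift, annual_cost):
    """ROI in percent: (incremental revenue - cost) / cost * 100."""
    incremental_revenue = baseline_revenue * conversion_lift
    return (incremental_revenue - annual_cost) / annual_cost * 100


roi = predictive_scoring_roi(
    baseline_revenue=500_000,  # total revenue attributed to MQLs
    conversion_lift=0.20,      # 20% lift
    annual_cost=15_000,        # annual platform cost
)
roi_rounded = round(roi)
```

With these inputs `roi_rounded` is 567, matching the first-year figure quoted above.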

The calculation compounds when factoring sales productivity gains from reduced time wasted on low-probability leads.

If your average AE spends 30% of their time on leads scoring below 50/100, reallocating that capacity to high-score opportunities raises the time spent on them from 70% to 100%, effectively 43% more selling hours (30/70) without headcount additions.

CAC reduction averages 15-20% as marketing dollars concentrate on channels and campaigns that produce statistically higher-scoring leads.

Organizations tracking full-funnel attribution discover certain tactics generate volume without quality, while others produce fewer but dramatically higher-converting prospects.

Implementation Roadmap

Phase 1 (Months 1-2): Data audit and enrichment to ensure CRM contains complete conversion history, lead source attribution, and engagement tracking across all touchpoints.

Establish data governance protocols addressing duplicate records, standardized field definitions, and historical data cleaning to remove outliers and anomalies.

Phase 2 (Month 3): Feature engineering and model training using 70% of historical data for algorithm development and 30% held back for validation testing.
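
A minimal sketch of the 70/30 holdout split, using only the standard library. The record layout and the fixed seed are assumptions; a random shuffle is used here, though a team could equally hold back the most recent 30% of conversions instead.

```python
import random


def train_validation_split(records, train_fraction=0.7, seed=42):
    """Shuffle a copy of the records and split it into train and validation sets."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    cutoff = round(len(shuffled) * train_fraction)
    return shuffled[:cutoff], shuffled[cutoff:]


# Hypothetical historical records: one dict per lead with its outcome.
history = [{"lead_id": i, "converted": i % 10 == 0} for i in range(1000)]
train, validation = train_validation_split(history)
```

The validation set is never shown to the model during training, so accuracy measured on it approximates performance on new leads.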

Run multiple algorithm types in parallel (random forest, gradient boosting, logistic regression) to benchmark performance and select the optimal approach for your dataset characteristics.

Phase 3 (Month 4): Pilot deployment scoring new leads in parallel with existing qualification processes to validate prediction accuracy before full cutover.

Compare model recommendations against sales team intuition and traditional scores to build stakeholder confidence and identify edge cases requiring refinement.

Phase 4 (Month 5+): Production deployment with automated workflows triggering based on score thresholds, plus continuous model retraining on quarterly or monthly cadences.

Establish governance committees reviewing model performance metrics, feature importance rankings, and stakeholder feedback to guide ongoing optimization.

Model Governance and Maintenance

Concept drift occurs when market conditions, buyer behavior, or competitive dynamics change the features that predict conversion, degrading model accuracy over time.

Leading teams monitor prediction calibration monthly, comparing actual conversion rates for each score band (0-20, 21-40, 41-60, 61-80, 81-100) against expected rates.

When observed conversion rates deviate more than 15% from predictions, trigger model retraining using recent historical data weighted more heavily than older conversions.
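
The calibration check can be sketched as below. It assumes integer scores from 0 to 100, expected rates greater than zero, and that the 15% tolerance is a relative deviation from the expected rate; the expected rates and sample data are hypothetical.

```python
BANDS = [(0, 20), (21, 40), (41, 60), (61, 80), (81, 100)]


def band_for(score):
    """Map an integer score to its (low, high) band."""
    for low, high in BANDS:
        if low <= score <= high:
            return (low, high)
    raise ValueError(f"score out of range: {score}")


def calibration_report(leads, expected_rates, tolerance=0.15):
    """Compare actual vs. expected conversion rate per band.

    leads: iterable of (score, converted) pairs.
    expected_rates: dict mapping each band to its predicted conversion rate.
    """
    totals = {band: [0, 0] for band in BANDS}  # [converted, total]
    for score, converted in leads:
        band = band_for(score)
        totals[band][0] += int(converted)
        totals[band][1] += 1

    report = {}
    for band, (won, total) in totals.items():
        if total == 0:
            continue  # no leads observed in this band this period
        actual = won / total
        expected = expected_rates[band]
        deviation = abs(actual - expected) / expected  # relative deviation
        report[band] = {
            "actual": actual,
            "expected": expected,
            "drifted": deviation > tolerance,
        }
    return report


def needs_retraining(report):
    """Retrain if any observed band drifted beyond the tolerance."""
    return any(row["drifted"] for row in report.values())


# Hypothetical expected rates and one month of observed outcomes.
expected = {(0, 20): 0.02, (21, 40): 0.05, (41, 60): 0.10, (61, 80): 0.20, (81, 100): 0.40}
observed = (
    [(90, True)] * 4 + [(90, False)] * 6      # 81-100 band: 40% actual
    + [(10, True)] * 1 + [(10, False)] * 19   # 0-20 band: 5% actual
)
report = calibration_report(observed, expected)
retrain = needs_retraining(report)
```

In this sample the top band matches its expected rate, but the bottom band converts at 5% against an expected 2%, so `retrain` is `True`.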

Feature importance analysis reveals which attributes drive scoring decisions, identifying when models over-rely on single variables or ignore newly relevant signals.

Document scoring criteria changes in decision logs, creating audit trails that explain why a lead received different scores at different points as models evolve.

Bias testing ensures models don’t inadvertently discriminate based on protected characteristics or introduce systematic errors favoring certain segments over others.

Frequently Asked Questions

How many historical conversions do I need before implementing predictive scoring?

You need a minimum of 1,000 closed deals for basic model reliability, though 5,000+ conversions enable sophisticated multi-variable analysis.

Organizations with fewer conversions should start with rules-based scoring while accumulating training data, then transition to predictive methods once sample size supports statistical significance.

The 1,000-conversion threshold assumes relatively consistent buyer personas; highly diverse customer bases require larger datasets to identify patterns across segments.

What’s the difference between predictive scoring and traditional lead scoring?

Traditional scoring assigns fixed point values based on subjective assessments (e.g., +10 for email open, +25 for demo request) while predictive models use machine learning to discover which combinations of factors actually correlate with revenue.

Predictive systems automatically adjust scoring criteria as new conversion data reveals changing patterns, whereas manual scoring requires periodic stakeholder reviews to update point assignments.

The accuracy gap typically produces 75% higher conversion rates for predictive approaches compared to rules-based systems.

How does predictive scoring integrate with existing marketing automation platforms?

Most predictive platforms connect via bi-directional API integrations with Salesforce, HubSpot, Marketo, and Pardot to sync lead data, engagement history, and conversion outcomes.

Scores flow into CRM lead records as custom fields, triggering automated workflows based on threshold values (e.g., auto-route leads scoring 80+ to SDRs within 5 minutes).

Integration also pulls attribution data showing which campaigns, channels, and touchpoints generated each lead to train models on source quality patterns.

Can predictive models account for account-based marketing strategies where multiple contacts influence single deals?

Advanced implementations aggregate contact-level scores to account scores, weighting by role seniority and engagement intensity to predict account-level conversion probability.
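
One simple way to sketch this aggregation is a weighted average of contact scores, where each weight is a seniority multiplier times an engagement level. The role names, multipliers, and the 0-1 engagement scale are hypothetical; production systems may use a learned model instead.

```python
# Hypothetical seniority multipliers; unknown roles default to 1.0.
SENIORITY_WEIGHTS = {"c_suite": 3.0, "director": 2.0, "manager": 1.5, "individual": 1.0}


def account_score(contacts):
    """Weighted average of contact scores for one account.

    Each contact is a dict with "role", "engagement" (0.0-1.0), and "score" (0-100).
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for contact in contacts:
        weight = SENIORITY_WEIGHTS.get(contact["role"], 1.0) * contact["engagement"]
        weighted_sum += weight * contact["score"]
        total_weight += weight
    if total_weight == 0:
        return 0.0  # no engaged contacts on the account
    return weighted_sum / total_weight


buying_committee = [
    {"role": "c_suite", "engagement": 0.5, "score": 80},
    {"role": "individual", "engagement": 1.0, "score": 50},
]
result = account_score(buying_committee)
```

Here the executive's weight is 1.5 and the individual contributor's is 1.0, so the account score is (1.5 × 80 + 1.0 × 50) / 2.5 = 68.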

The models identify buying committee patterns, recognizing that deals with C-suite engagement plus champion-level product usage signal 4x higher close rates than single-threaded opportunities.

ABM-optimized scoring requires training data tagged with account-level outcomes rather than individual contact conversions.

What accuracy rates should I expect from predictive lead scoring models?

Enterprise-grade models achieve 85-92% classification accuracy when predicting which leads will convert within 90 days, measured using held-out test datasets.

The top score decile (leads scoring 91-100) typically converts at 6-15x the rate of the bottom decile (leads scoring 0-10), creating clear prioritization guidance for sales teams.
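
The top-versus-bottom ratio can be measured on a held-out set with a sketch like the one below. It defines deciles by rank (the highest-scoring and lowest-scoring tenth of leads), which is an assumption; the sample data is synthetic and is not a benchmark.

```python
def decile_lift(leads):
    """Ratio of top-decile to bottom-decile conversion rate.

    leads: list of (score, converted) pairs from a held-out set.
    """
    ranked = sorted(leads, key=lambda pair: pair[0])
    size = len(ranked) // 10
    if size == 0:
        raise ValueError("need at least 10 leads to form deciles")
    bottom, top = ranked[:size], ranked[-size:]

    def rate(group):
        return sum(1 for _, converted in group if converted) / len(group)

    bottom_rate, top_rate = rate(bottom), rate(top)
    if bottom_rate == 0:
        return float("inf")  # nothing converted in the bottom decile
    return top_rate / bottom_rate


# Synthetic data: scores 1-100, five conversions in the top decile, one in the bottom.
leads = [(s, (s > 90 and s % 2 == 0) or s == 5) for s in range(1, 101)]
lift = decile_lift(leads)
```

On this synthetic set the top decile converts at 50% and the bottom at 10%, giving a lift of 5x.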

Accuracy depends heavily on data quality; organizations with clean attribution tracking and complete engagement history achieve 20-30% better performance than those with fragmented data.

How often should predictive scoring models be retrained?

Quarterly retraining suffices for stable markets, while organizations in rapidly evolving industries or experiencing significant growth should retrain monthly.

Monitor model calibration by comparing predicted vs. actual conversion rates for each score band; when deviation exceeds 15%, trigger immediate retraining regardless of schedule.

Automate retraining pipelines so models continuously incorporate new conversion outcomes without manual data science intervention.

What’s the typical ROI timeline for predictive lead scoring implementation?

Organizations achieve positive ROI within 3-6 months as improved conversion rates and sales productivity gains offset platform and implementation costs.

The 138% ROI benchmark reflects first-year performance; returns compound over time as models accumulate more training data and teams optimize workflows around scoring insights.

Calculate your specific ROI using: [(Incremental Revenue from Conversion Lift) – (Platform Cost + Implementation Cost)] / Total Investment × 100.