TL;DR:

- Statistical prediction techniques assign probability scores that forecast specific customer actions (purchase, churn, engagement) with 70-90% accuracy, replacing spray-and-pray marketing with targeted outreach.

- Organizations implementing propensity models achieve 30% higher conversion rates and 25% lower CAC by concentrating budget on high-probability prospects rather than uniform targeting across undifferentiated audiences.

- Attribution data serves as the foundation for model training: lead source performance, journey complexity patterns, and channel touchpoint sequences form the feature sets that predict future behavior from historical conversion correlations.

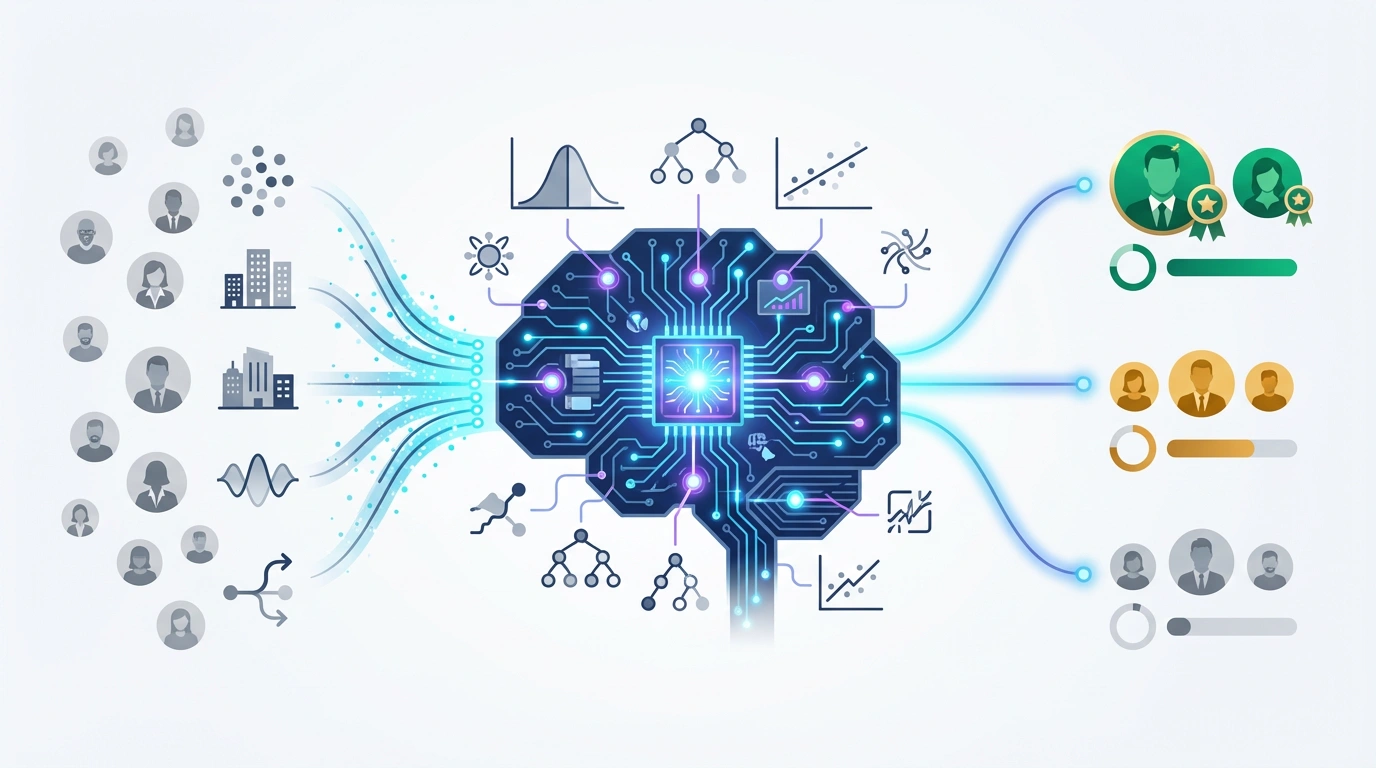

What Is Propensity Modeling?

Propensity Modeling applies statistical and machine learning algorithms to historical customer data, generating probability scores that predict the likelihood individual prospects will complete specific actions including purchase, subscription, churn, or engagement within defined timeframes.

Unlike rules-based scoring that assigns fixed point values to observed behaviors, propensity models discover non-obvious correlation patterns across hundreds of variables to identify mathematical relationships between past customer attributes and future actions.

Enterprise implementations track multiple simultaneous propensities per customer—purchase propensity (72% likely within 30 days), churn propensity (18% risk within 90 days), upsell propensity (45% receptive to expansion), and engagement propensity (63% email responsive)—enabling coordinated multi-objective strategies.

The methodology requires a minimum viable dataset of 5,000-10,000 historical conversions to produce stable estimates, and accuracy generally improves with sample size, with diminishing returns, as models detect increasingly subtle signals distinguishing converters from non-converters.

Model outputs inform resource allocation decisions by quantifying expected return from targeting each scored segment, calculating that pursuing prospects scoring 80+ yields $347 LTV versus $89 for 40-60 scores, fundamentally reorienting budget deployment toward mathematically validated opportunities.

Core Model Types and Applications

Purchase Propensity Models predict conversion likelihood based on browsing patterns, engagement velocity, firmographic fit, technology stack alignment, and historical source performance patterns that correlate with closed revenue.

These models enable surgical campaign targeting where email sequences, ad retargeting, and sales outreach concentrate on prospects scoring above an empirically determined threshold (typically the 65th-75th percentile or higher) where cost-per-acquisition economics justify the investment.

Churn Propensity Models identify existing customers exhibiting usage decay, support ticket escalation, pricing page visits, competitor research signals, and engagement drop-off, patterns that typically appear 60-90 days before the actual churn event.

Early warning systems trigger proactive retention workflows when accounts cross 25-40% churn probability thresholds, deploying customer success interventions, loyalty incentives, or executive relationship-building before problems metastasize into irreversible dissatisfaction.

Upsell/Cross-Sell Propensity models analyze product usage breadth, feature adoption depth, team expansion patterns, and integration implementations signaling readiness for premium tier migrations or complementary product additions.

The scoring directs account managers toward expansion-ready customers (70%+ propensity) rather than indiscriminately pitching entire customer bases, improving upsell acceptance rates 3-5x while reducing negative friction from premature or misaligned offers.

Lead Quality Propensity models evaluate new prospects immediately at capture, predicting which will progress to MQL, SQL, and closed revenue based on origination source, form completion behavior, firmographic attributes, and technographic compatibility.

Real-time scoring enables dynamic routing where high-propensity leads (75%+) receive instant SDR follow-up while low-propensity prospects (below 40%) enter extended nurture sequences or disqualification workflows, optimizing human capacity allocation toward mathematically probable opportunities.

Algorithm Selection and Methodology

Logistic Regression serves as the foundational propensity modeling technique, producing interpretable probability curves showing how each variable influences likelihood of the target behavior occurring.

The method’s transparency enables stakeholder understanding where sales teams see that “prospects from partner referrals with 3+ website sessions convert at 42% versus 8% for cold display ads with single sessions,” building model trust through explainable predictions.
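
The interpretability described above comes from the model's form: a weighted sum of features passed through a sigmoid. The following is a minimal sketch of that calculation. The coefficients, intercept, and feature names are hypothetical values chosen for illustration and are not fitted to any real data.

```python
import math

def propensity(features, coefficients, intercept):
    """Logistic model: probability = sigmoid(intercept + sum(coef * value))."""
    z = intercept + sum(coefficients[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical coefficients (assumed for illustration, not fitted to real data).
COEFFICIENTS = {"partner_referral": 1.6, "sessions_3_plus": 0.9}
INTERCEPT = -2.4

cold_lead = {"partner_referral": 0, "sessions_3_plus": 0}
warm_lead = {"partner_referral": 1, "sessions_3_plus": 1}

p_cold = propensity(cold_lead, COEFFICIENTS, INTERCEPT)
p_warm = propensity(warm_lead, COEFFICIENTS, INTERCEPT)

# exp(coefficient) is the odds ratio: how much the odds of converting multiply
# when the feature switches from 0 to 1, holding the other features fixed.
odds_ratios = {name: math.exp(c) for name, c in COEFFICIENTS.items()}

print(f"cold lead: {p_cold:.2f}, warm lead: {p_warm:.2f}")
print(odds_ratios)
```

Because each coefficient maps to a single odds ratio, a sales team can read the effect of one variable without needing to understand the rest of the model.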

Decision Tree and Random Forest algorithms handle non-linear relationships and complex interaction effects that logistic regression misses, particularly valuable when multiple variables combine to create exponential impact rather than additive effects.

Random forests aggregate 100-500 individual decision trees trained on different data subsets, achieving 8-12% higher prediction accuracy than a single decision tree while reducing the overfitting that individual trees are prone to, especially on sparse datasets.

Gradient Boosting (XGBoost, LightGBM) represents the current state of the art for propensity prediction, iteratively building models that correct their predecessors’ errors and achieving 85-92% classification accuracy in well-instrumented B2B environments with rich historical data.

These ensemble methods automatically discover interaction patterns like “enterprise prospects from organic search with 5+ content downloads and Salesforce usage convert at 67% versus baseline 12%,” surfacing insights analysts wouldn’t manually hypothesize.

Neural Networks tackle extreme complexity scenarios with 500+ input features and millions of training examples, though implementation requires specialized data science expertise and substantial computational infrastructure versus lighter-weight alternatives.

Deep learning approaches excel when behavior prediction depends on subtle sequential patterns like “prospects who view pricing then case studies then integrations in that specific order convert 3.8x baseline,” detecting temporal dynamics simpler models miss.

Feature Engineering for Model Performance

Firmographic Variables establish baseline compatibility scoring through company size bands, industry classifications, revenue estimates, geographic markets, growth trajectories, and organizational maturity indicators that filter fundamental fit.

These explicit attributes typically explain 30-40% of conversion variance, with the remaining 60-70% coming from behavioral and contextual signals that distinguish high-intent from low-intent prospects within acceptable firmographic profiles.

Behavioral Engagement Metrics capture interaction intensity through page visit frequency, session duration, content topic consumption, email click patterns, webinar attendance, and feature trial activation measuring active interest versus passive awareness.

Velocity calculations tracking engagement acceleration (3 visits this week versus 1 last month) outperform absolute volume metrics as leading indicators, since upward trajectory signals growing intent while static high engagement may represent research without buying authority.
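
One simple way to express engagement velocity is the ratio of the recent visit rate to the prior visit rate. The sketch below is an assumed definition for illustration; the function name and the 7-day and 30-day window lengths are not taken from any specific scoring product.

```python
def engagement_velocity(recent_visits, recent_days, prior_visits, prior_days):
    """Ratio of the recent visit rate to the prior visit rate (visits per day).

    Values above 1.0 mean engagement is accelerating. Returns None when there
    is no prior activity to compare against.
    """
    if prior_visits == 0 or prior_days <= 0 or recent_days <= 0:
        return None
    recent_rate = recent_visits / recent_days
    prior_rate = prior_visits / prior_days
    return recent_rate / prior_rate

# The example from the text: 3 visits this week versus 1 visit last month.
# Window lengths of 7 and 30 days are assumptions.
velocity = engagement_velocity(recent_visits=3, recent_days=7,
                               prior_visits=1, prior_days=30)
print(round(velocity, 1))
```

A ratio like this can be fed to the model as a feature alongside absolute visit counts, so the model can weigh trajectory and volume separately.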

Technographic and Intent Data append external signals showing technology stack composition, competitive platform usage, category research activity, and third-party content consumption patterns that correlate with near-term purchasing windows.

Prospects currently using competitor platforms while demonstrating category intent achieve 4-6x higher propensity scores than similar firmographic profiles without these contextual overlays, justifying enrichment investment despite per-lead costs.

Attribution and Source Features incorporate lead origination channel, multi-touch journey complexity, campaign engagement history, and historical source-to-conversion performance creating temporal context for current behavior interpretation.

Models learn that webinar attendees scoring 65 actually convert at 52% rates while paid search leads scoring 75 convert at 31%, automatically adjusting probability calculations based on channel quality patterns invisible to generic scoring frameworks.

Training Data Requirements and Quality

Minimum Sample Size thresholds require 5,000-10,000 historical conversion examples for basic model reliability, with enterprise-grade accuracy demanding 25,000+ observations enabling detection of subtle multivariate interaction effects.

Organizations below these thresholds should implement rules-based lead scoring while accumulating training data, transitioning to propensity modeling once the sample is large enough to support a margin of error under 5% on predicted conversion rates.

Class Balance Considerations address scenarios where target behaviors represent 2-5% of total observations (e.g., 500 conversions from 20,000 leads), requiring specialized techniques like SMOTE oversampling or class weighting preventing models from defaulting to “always predict no conversion.”

Imbalanced datasets produce deceptive accuracy metrics where models achieving “95% accuracy” simply predict nobody converts, necessitating precision-recall curves and F1 scores evaluating actual positive prediction performance.
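
The deceptive-accuracy problem can be reproduced with a few lines of arithmetic. This sketch uses the 500-conversions-from-20,000-leads example from the text and a classifier that always predicts "no conversion"; the function name is illustrative.

```python
def classification_metrics(actual, predicted):
    """Accuracy, precision, recall, and F1 for binary labels (1 = converted)."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# 500 conversions out of 20,000 leads, scored by a model that never
# predicts a conversion.
actual = [1] * 500 + [0] * 19_500
always_no = [0] * 20_000

metrics = classification_metrics(actual, always_no)
print(metrics)
```

Accuracy looks excellent because 97.5% of leads never convert, while recall and F1 are zero because the model finds none of the converters.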

Feature Completeness Standards mandate 85%+ data population across critical variables, with missing values introducing noise degrading prediction accuracy particularly when absence patterns correlate with outcome variables creating systematic bias.

Enrichment APIs, progressive profiling, and retroactive data appends address gaps in firmographic, technographic, and engagement history preventing model training on incomplete representations that produce unreliable production scores.

Temporal Consistency ensures training data reflects current market conditions rather than outdated buyer behavior patterns. In fast-evolving categories this requires recency weighting, where conversions from the last 90-180 days receive 3-5x the weight of conversions more than 12 months old.
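
Recency weighting is usually applied as a per-example sample weight during training. The sketch below shows one possible scheme. The 180-day and 365-day cutoffs, the 4x weight, and the linear taper between them are assumptions picked from within the ranges quoted above.

```python
def recency_weight(age_days, recent_cutoff=180, old_cutoff=365, recent_weight=4.0):
    """Sample weight for a historical conversion based on its age in days.

    Conversions newer than recent_cutoff get recent_weight, conversions older
    than old_cutoff get 1.0, and ages in between taper linearly.
    """
    if age_days <= recent_cutoff:
        return recent_weight
    if age_days >= old_cutoff:
        return 1.0
    fraction = (age_days - recent_cutoff) / (old_cutoff - recent_cutoff)
    return recent_weight - fraction * (recent_weight - 1.0)

ages = [30, 150, 270, 400]
weights = [recency_weight(age) for age in ages]
print(list(zip(ages, weights)))
```

Most training libraries accept a list like `weights` as a sample-weight argument, so the scheme can be changed without touching the model code.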

Quarterly model retraining cycles maintain relevance as economic conditions, competitive landscapes, and marketing mix evolution fundamentally alter which signals predict conversion, with stale models losing 15-25% accuracy annually without refresh.

Model Evaluation and Calibration

Accuracy Metrics Beyond Basic Rates employ receiver operating characteristic (ROC) curves measuring true positive versus false positive trade-offs across different score thresholds, with area under curve (AUC) scores above 0.75 indicating useful predictive power.

High-performing propensity models achieve 0.80-0.90 AUC scores in controlled environments, translating to 2.5-4x conversion rate improvement when targeting top-scoring quintile versus random prospect selection across entire database.
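
AUC has a direct interpretation: it is the probability that a randomly chosen converter receives a higher score than a randomly chosen non-converter. The sketch below computes it from that definition on a small made-up sample. It is quadratic in the number of examples, so it is meant for illustration rather than production use.

```python
def auc(scores, labels):
    """AUC as the share of (converter, non-converter) pairs ranked correctly.

    Ties between a converter and a non-converter count as half a win.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Made-up scores and outcomes for eight prospects (1 = converted).
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
labels = [1, 1, 0, 1, 0, 0, 1, 0]

print(auc(scores, labels))  # 12 of 16 pairs ranked correctly -> 0.75
```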

Calibration Assessment validates whether predicted probabilities match observed outcome rates. In a well-calibrated model, prospects scored 60-70% convert at roughly 65%; in a miscalibrated model, prospects scored 70% might convert at only 45%, which undermines trust in the scores.

Calibration plots revealing systematic over-prediction (scores too high) or under-prediction (scores too low) require recalibration techniques adjusting probability outputs while maintaining rank ordering that prioritizes highest-propensity prospects appropriately.
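
A calibration check can be done without plotting by binning predicted probabilities and comparing each bin's mean prediction to its observed conversion rate. This is a minimal sketch on made-up data; the function name and the ten equal-width bins are assumptions.

```python
def calibration_table(probabilities, outcomes, n_bins=10):
    """Group predictions into equal-width bins and compare to observed rates."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probabilities, outcomes):
        index = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[index].append((p, y))
    rows = []
    for i, members in enumerate(bins):
        if not members:
            continue
        mean_predicted = sum(p for p, _ in members) / len(members)
        observed_rate = sum(y for _, y in members) / len(members)
        rows.append({
            "bin_start": i / n_bins,
            "count": len(members),
            "mean_predicted": mean_predicted,
            "observed_rate": observed_rate,
            "gap": mean_predicted - observed_rate,  # positive = over-prediction
        })
    return rows

# Made-up data: one well-calibrated group and one over-predicted group.
probabilities = [0.65] * 20 + [0.72] * 20
outcomes = [1] * 13 + [0] * 7 + [1] * 9 + [0] * 11

rows = calibration_table(probabilities, outcomes)
for row in rows:
    print(row)
```

A consistently positive gap across bins indicates systematic over-prediction, which is the case where recalibrating the output probabilities is worth considering.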

Lift Analysis quantifies improvement versus baseline random targeting by calculating how many more conversions the top-scoring decile produces compared to average population conversion rates, with 3-5x lift considered strong performance.

Organizations achieving 4x lift on 70+ scored prospects can justify spending up to 4x more per prospect on this segment before cost per acquisition rises to the level of untargeted outreach, shifting the efficient frontier of customer acquisition cost.
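
Top-decile lift is the conversion rate among the highest-scored 10% of prospects divided by the conversion rate of the whole population. The sketch below computes it on made-up data constructed so that the top decile converts at 40% against a 10% overall rate.

```python
def top_decile_lift(scores, outcomes):
    """Conversion rate in the top-scoring 10% divided by the overall rate."""
    ranked = sorted(zip(scores, outcomes), key=lambda pair: pair[0], reverse=True)
    cutoff = max(1, len(ranked) // 10)
    top = ranked[:cutoff]
    top_rate = sum(y for _, y in top) / len(top)
    base_rate = sum(outcomes) / len(outcomes)
    if base_rate == 0:
        raise ValueError("lift is undefined when nobody converted")
    return top_rate / base_rate

# Made-up data: 100 prospects, 10 conversions, 4 of them in the top decile.
scores = [i / 100 for i in range(100)]
outcomes = [0] * 100
for converted_index in (99, 98, 97, 96, 80, 65, 50, 35, 20, 5):
    outcomes[converted_index] = 1

lift = top_decile_lift(scores, outcomes)
print(lift)
```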

Holdout Testing reserves 20-30% of historical data excluded from model training to validate prediction accuracy on never-before-seen examples, preventing overfitting where models memorize training data rather than generalizing underlying behavior patterns.

Production deployment requires holdout validation demonstrating less than 10% accuracy degradation versus training performance. Larger gaps indicate that model complexity exceeds what the dataset can support, in which case a simpler algorithm is the better choice.
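
The holdout procedure can be sketched in two small functions: one that sets aside a random share of records before training, and one that compares the metric on both sets. Reading the 10% limit as a relative drop is an assumption, as are the function names and the metric values used in the example.

```python
import random

def holdout_split(records, holdout_fraction=0.25, seed=42):
    """Shuffle records and reserve a fraction that the model never trains on."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_holdout = int(len(shuffled) * holdout_fraction)
    return shuffled[n_holdout:], shuffled[:n_holdout]

def passes_holdout_check(train_metric, holdout_metric, max_relative_drop=0.10):
    """True when holdout performance is within the allowed drop from training."""
    drop = (train_metric - holdout_metric) / train_metric
    return drop < max_relative_drop

records = list(range(1000))  # stand-in for historical lead records
train, holdout = holdout_split(records)
print(len(train), len(holdout))

# Hypothetical metric values: a small gap passes, a large gap suggests overfitting.
print(passes_holdout_check(train_metric=0.88, holdout_metric=0.84))
print(passes_holdout_check(train_metric=0.95, holdout_metric=0.80))
```

When conversion timing matters, splitting by date (training on older records and holding out the most recent ones) is a common alternative to a random shuffle.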

Integration with Marketing Operations

CRM Score Fields store propensity predictions as custom fields updated nightly or weekly via API integrations, enabling segmentation, workflow automation, and territory assignment rules leveraging mathematical likelihood rather than subjective rep judgment.

Automated routing sends 80+ scored leads to senior closers while 40-60 scores enter nurture sequences and below-40 leads trigger disqualification workflows, systematically matching prospect quality to appropriate resource intensity.
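
The routing rule above reduces to a threshold lookup. In this sketch the queue names are hypothetical, and the handling of scores from 60 to 79, which the text does not specify, is an assumption.

```python
def route_lead(score):
    """Map a 0-100 propensity score to a follow-up queue."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 80:
        return "senior_closer"
    if score >= 60:
        # Assumption: the text leaves the 60-79 band unspecified.
        return "standard_sales_queue"
    if score >= 40:
        return "nurture_sequence"
    return "disqualification_workflow"

for score in (92, 71, 55, 18):
    print(score, route_lead(score))
```

Keeping the thresholds in one function makes it easy to adjust them as lift analysis shows where conversion rates actually change.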

Marketing Automation Triggers activate propensity-based campaigns where high-score thresholds initiate aggressive multi-channel outreach (email sequences, retargeting ads, direct mail, SDR calls) while low scores receive passive content nurturing.

Dynamic segmentation refreshes daily ensure prospects receive appropriate messaging intensity as scores evolve, with engagement behavior influencing score updates creating feedback loops where response to campaigns signals strengthening or weakening propensity.

Ad Platform Audience Syncing exports high-propensity segments to Google, Meta, and LinkedIn enabling lookalike modeling based on prospects most likely to convert rather than total lead lists including low-quality contacts diluting algorithmic learning.

Seed audiences composed exclusively of 75+ scored prospects produce lookalike pools achieving 35-50% higher conversion rates than audiences built from entire databases containing majority poor-fit contacts confusing platform algorithms.

Sales Intelligence Overlays surface propensity scores within rep workflows at moment of outreach, prioritizing call lists by mathematical conversion probability and triggering urgency alerts when high-scoring accounts exhibit time-limited buying windows.

Integration with conversational intelligence platforms captures discovery call insights automatically updating scores based on qualification criteria mentions, creating closed-loop refinement where human judgment validates and improves algorithmic predictions.

Attribution Data as Model Foundation

Channel Performance Patterns create predictive features where leads from historically high-converting sources (partner webinars at 42%, organic search at 38%) receive elevated propensity scores versus leads from low-converting sources (display ads at 7%, content syndication at 12%) with identical engagement.

This source-quality intelligence prevents models from treating all leads equally, automatically incorporating institutional knowledge that certain acquisition channels systematically produce superior or inferior prospect quality regardless of surface-level behaviors.

Journey Complexity Signals demonstrate prospects requiring 8+ touchpoints across 4+ channels before converting exhibit different characteristics than single-session conversions, with multi-touch journeys correlating with 35% higher propensity among enterprise segments.

Attribution data capturing complete paths enables temporal feature engineering like “days since first touch,” “number of return sessions,” and “cross-channel engagement breadth” that predict conversion timing beyond static demographic attributes.

Touchpoint Sequence Patterns reveal specific journey progressions (awareness content → case study → pricing → demo request) that signal high intent, with models learning these sequential dependencies invisible to scoring systems treating interactions as independent events.

Markov chain analysis of attribution data identifies transition probabilities between touchpoints, automatically discovering that prospects who view the integrations page after pricing convert at 58% versus 19% when the sequence is reversed, which then informs propensity calculations.
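
Transition probabilities for a first-order Markov chain can be estimated by counting how often each touchpoint is followed by each other touchpoint. The journeys below are made up for illustration, with "converted" and "lost" added as terminal states.

```python
from collections import Counter, defaultdict

def transition_probabilities(journeys):
    """Estimate P(next touchpoint | current touchpoint) from observed journeys."""
    counts = defaultdict(Counter)
    for journey in journeys:
        for current, following in zip(journey, journey[1:]):
            counts[current][following] += 1
    return {
        state: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for state, followers in counts.items()
    }

# Made-up journeys ending in a terminal "converted" or "lost" state.
journeys = [
    ["pricing", "integrations", "converted"],
    ["pricing", "integrations", "converted"],
    ["pricing", "integrations", "lost"],
    ["integrations", "pricing", "lost"],
    ["pricing", "lost"],
]

probabilities = transition_probabilities(journeys)
print(probabilities["pricing"])
print(probabilities["integrations"])
```

A first-order chain only looks one step back. Capturing longer ordered sequences requires a higher-order chain or sequence features built from the full path.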

Source-Behavior Interaction Effects uncover that identical engagement levels produce vastly different conversion probabilities depending on origination, where webinar attendees with 3 content downloads convert at 47% versus paid social leads with same engagement converting at 9%.

These interaction discoveries justify attribution investment since models without channel context systematically misevaluate prospects, overscoring low-quality sources showing artificial engagement inflation while undervaluing high-quality sources with conservative interaction patterns.

ROI and Business Impact

Conversion Rate Improvement averaging 25-35% emerges from targeting concentration where campaigns exclusively pursue 70+ scored prospects versus undifferentiated mass outreach, with top-performing organizations achieving 50%+ lift through aggressive threshold discipline.

The arithmetic is compelling: 1,000 leads at a 5% baseline conversion rate yield 50 customers, while 300 high-score leads identified by a propensity model and converting at 15% produce 45 customers, 90% of the customer volume from 70% less outreach and its associated cost.
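
The comparison can be checked directly. This sketch reproduces the figures from the paragraph above; the function and variable names are illustrative.

```python
def compare_targeting(baseline_leads, baseline_rate, targeted_leads, targeted_rate):
    """Compare customers won and outreach volume for mass vs. targeted outreach."""
    baseline_customers = baseline_leads * baseline_rate
    targeted_customers = targeted_leads * targeted_rate
    return {
        "baseline_customers": baseline_customers,
        "targeted_customers": targeted_customers,
        "outreach_reduction": 1 - targeted_leads / baseline_leads,
        "customers_retained": targeted_customers / baseline_customers,
    }

result = compare_targeting(baseline_leads=1000, baseline_rate=0.05,
                           targeted_leads=300, targeted_rate=0.15)
print(result)
```

The trade-off is explicit: five fewer customers in exchange for contacting 700 fewer leads, which is worthwhile whenever the cost of that outreach exceeds the value of the lost conversions.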

CAC Reduction of 20-30% results from eliminating wasted spend pursuing low-propensity prospects who consume marketing resources without converting, reallocating budget toward mathematically validated high-probability opportunities.

Organizations tracking cost-per-SQL by propensity band discover 80+ scored leads cost $247 versus $863 for 40-60 scores due to faster progression and higher qualification rates, justifying differential investment intensity across scored segments.

Churn Prevention ROI delivers 5-8x return when early warning models enable proactive retention interventions 60-90 days before cancellation, with saved revenue from prevented churn exceeding program costs several times over.

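The best-case calculation is striking: preventing 100 churns among $50K ACV customers at $500 per intervention retains $5M in revenue against $50K of program spend, a 100:1 return. That figure assumes every intervention succeeds and that only accounts that would otherwise have churned are targeted; realistic save rates and false positives bring returns closer to the 5-8x range.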
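
The retention return is sensitive to how many interventions actually prevent a cancellation. The sketch below reproduces the 100:1 figure and adds a `save_rate` parameter, which is an assumption introduced here to show that sensitivity; the 25% value is purely illustrative.

```python
def retention_roi(accounts_targeted, acv, cost_per_intervention, save_rate=1.0):
    """Retained revenue divided by program cost.

    save_rate is the share of targeted accounts whose churn is actually
    prevented; 1.0 reproduces the best case where every intervention works.
    """
    retained_revenue = accounts_targeted * save_rate * acv
    program_cost = accounts_targeted * cost_per_intervention
    return retained_revenue / program_cost

best_case = retention_roi(accounts_targeted=100, acv=50_000, cost_per_intervention=500)
partial = retention_roi(accounts_targeted=100, acv=50_000, cost_per_intervention=500,
                        save_rate=0.25)
print(best_case, partial)
```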

Sales Productivity Gains of 35-45% occur when propensity-based routing eliminates rep time wasted on low-probability prospects, concentrating discovery effort on high-score leads where qualification and progression occur 3-4x faster than database average.

The capacity unlocking enables 40% quota growth without headcount additions as reps pursue 2.5x more qualified opportunities within existing working hours, directly flowing to revenue expansion through productivity multiplication.

Frequently Asked Questions

How accurate are propensity models compared to traditional lead scoring?

Propensity models typically achieve AUC scores of 0.70-0.90, versus 0.50-0.60 for rules-based scoring systems, which is only slightly better than the 0.50 of random guessing. The practical gain is better expressed as lift: a 2-4x improvement in conversion rate among top-scored prospects.

The accuracy advantage stems from discovering multivariate correlation patterns across hundreds of variables that human-designed rules miss, with machine learning automatically detecting that specific combinations of source+behavior+firmographics produce exponential conversion probability differences.

Organizations implementing propensity models report 25-35% conversion rate improvements and 3-5x lift in top-scoring deciles versus baseline random targeting, validating material business impact beyond academic accuracy metrics.

What’s the minimum data requirement to build a reliable propensity model?

Minimum viable propensity modeling requires 5,000-10,000 historical conversion examples to produce stable estimates, though enterprise-grade accuracy with AUC scores of 0.85 or higher typically needs 25,000+ conversions with complete attribute data.

Organizations below these thresholds should implement traditional lead scoring while accumulating training data, transitioning to propensity approaches once sample size supports confidence intervals under 5% error margins on prediction accuracy.

Beyond raw volume, data quality matters critically—models require 85%+ population rates across key variables since missing values introduce noise degrading predictions, with incomplete records often requiring enrichment investment before modeling viability.

How does propensity modeling integrate with attribution tracking systems?

Attribution data serves as foundational model training input by providing lead source classification, journey touchpoint sequences, channel engagement patterns, and campaign interaction history that become predictive features in propensity algorithms.

Models learn that certain sources (partner referrals converting at 42%) systematically produce higher-propensity prospects than others (display ads at 7%) regardless of engagement scores, automatically incorporating channel quality intelligence into probability calculations.

Integration creates feedback loops where propensity scores inform budget allocation across channels based on predicted conversion likelihood, while attribution measurement validates which sources actually produced high-scoring prospects justifying continued investment.

What algorithms work best for B2B propensity modeling?

Gradient boosting methods (XGBoost, LightGBM) currently deliver optimal B2B propensity prediction with 85-92% accuracy by automatically discovering complex interaction effects and non-linear relationships that simpler algorithms miss.

Logistic regression remains valuable for explainability when stakeholders require transparent coefficient interpretation showing exactly how each variable influences propensity scores, though accuracy typically trails ensemble methods by 8-15 percentage points.

Algorithm selection depends on dataset characteristics—organizations with 50,000+ conversions and complete feature data benefit from neural networks, while smaller datasets under 10,000 examples perform better with regularized logistic regression or random forests preventing overfitting.

How often should propensity models be retrained to maintain accuracy?

Quarterly retraining maintains model relevance as market conditions, competitive dynamics, and buyer behavior evolve, with studies showing 15-25% annual accuracy degradation for static models never refreshed against new conversion data.

Fast-moving categories experiencing rapid change (technology, financial services) require monthly retraining while stable industries (manufacturing, construction) tolerate semi-annual cycles without material accuracy loss.

Automated monitoring tracking prediction accuracy against actual outcomes should trigger immediate retraining when performance degrades 10%+ below baseline, indicating systematic drift requiring model recalibration rather than waiting for scheduled refresh cycles.

Can propensity models predict multiple behaviors simultaneously?

Enterprise implementations track 3-5 simultaneous propensities per customer (purchase, churn, upsell, engagement, referral) enabling coordinated multi-objective strategies rather than single-dimension optimization creating unintended trade-offs.

Multi-model architectures require careful coordination ensuring models don’t produce conflicting recommendations like simultaneously predicting high purchase propensity and high churn risk, necessitating hierarchy rules determining priority when scores diverge.

The computational approach trains separate specialized models for each target behavior rather than single multi-output models, achieving higher accuracy through dedicated optimization while enabling independent refresh cycles as different behaviors require different retraining frequencies.

What ROI should organizations expect from propensity modeling implementation?

Organizations implementing propensity modeling achieve 25-35% conversion rate improvements and 20-30% CAC reduction within 6-12 months, with payback periods averaging 4-8 months for mid-market companies and 8-16 months for enterprises requiring extensive data infrastructure buildout.

ROI calculations should include both efficiency gains (reduced wasted spend on low-propensity prospects) and effectiveness improvements (higher conversion from targeting concentration), with combined impact typically producing 200-400% first-year returns.

Churn prevention models deliver particularly compelling economics with 5-8x returns as saved customer lifetime value from prevented cancellations dwarfs program costs, though purchase propensity models driving acquisition represent larger absolute revenue impact for growth-stage organizations.